Feb 18 2025 36 mins 5

Fluss is a compelling new project in the realm of real-time data processing. I spoke with Jark Wu, who leads the Fluss and Flink SQL team at Alibaba Cloud, to understand its origins and potential. Jark is a key figure in the Apache Flink community, known for his work in building Flink SQL from the ground up and creating Flink CDC and Fluss.

You can read the Q&A version of the conversation here, and don’t forget to listen to the podcast.

What is Fluss and its use cases?

Fluss is a streaming storage specifically designed for real-time analytics. It addresses many of Kafka's challenges in analytical infrastructure. The combination of Kafka and Flink is not a perfect fit for real-time analytics; the integration of Kafka and Lakehouse is very shallow. Fluss is an analytical Kafka that builds on top of Lakehouse and integrates seamlessly with Flink to reduce costs, achieve better performance, and unlock new use cases for real-time analytics.

How do you compare Fluss with Apache Kafka?

Fluss and Kafka differ fundamentally in design principles. Kafka is designed for streaming events, but Fluss is designed for streaming analytics.

Architecture Difference

The first difference is the Data Model. Kafka is designed to be a black box to collect all kinds of data, so Kafka doesn't have built-in schema and schema enforcement; this is the biggest problem when integrating with schematized systems like Lakehouse. In contrast, Fluss adopts a Lakehouse-native design with structured tables, explicit schemas, and support for all kinds of data types; it directly mirrors the Lakehouse paradigm. Instead of Kafka's topics, Fluss organizes data into database tables with partitions and buckets. This Lakehouse-first approach eliminates the friction of using Lakehouse as a deep storage for Fluss.

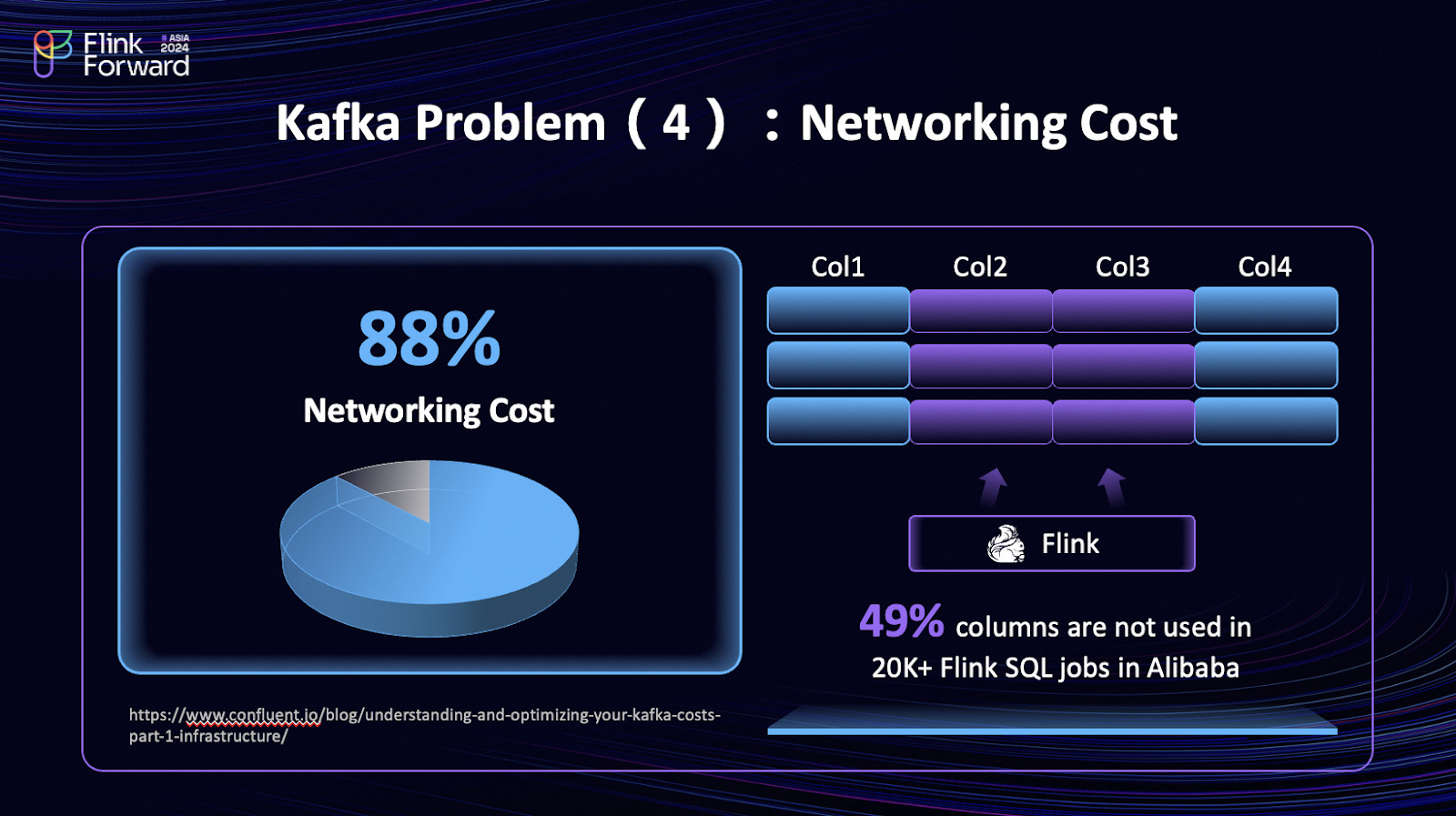

The second difference is the Storage Model. Fluss introduces Apache Arrow as its columnar log storage model for efficient analytical queries, whereas Kafka persists data as unstructured and row-oriented logs for efficient sequence scans. Analytics requires strong data-skipping ability in storage, so sequence scanning is not common; columnar pruning and filter pushdown are basic functionalities of analytical storage. Among the 20,000 Flink SQL jobs at Alibaba, only 49% of columns of Kafka data are read on average.

The third difference is Data Mutability: Fluss natively supports real-time updates (e.g., row-level modifications) through LSM tree mechanisms and provides read-your-writes consistency with milli-second latency and high throughput. While Kafka primarily handles append-only streams, the Kafka compacted topic only provides a weak update semantic that compact will keep at least one value for a key, not only the latest.

The fourth difference is the Lakehouse Architecture. Fluss embraces the Lakehouse Architecture. Fluss uses Lakehouse as a tiered storage, and data will be converted and tiered into data lakes periodically; Fluss only retains a small portion of recent data. So you only need to store one copy of data for your streaming and Lakehouse. But the true power of this architecture is it provides a union view of Streaming and Lakehouse, so whether it is a Kafka client or a query engine on Lakehouse, they all can visit the streaming data and Lakehouse data as a union view as a single table. It brings powerful analytics to streaming data users.

On the other hand, it provides second-level data insights for Lakehouse users. Most importantly, you only need to store one copy of data for your streaming and Lakehouse, which reduces costs. In contrast, Kafka's tiered storage only stores Kafka log segments in remote storage; it is only a storage cost optimization for Kafka and has nothing to do with Lakehouse.

The Lakehouse storage serves as the historical data layer for the streaming storage, which is optimized for storing long-term data with minute-level latencies. On the other hand, streaming storage serves as the real-time data layer for Lakehouse storage, which is optimized for storing short-term data with millisecond-level latencies. The data is shared and is exposed as a single table. For streaming queries on the table, it firstly uses the Lakehouse storage as historical data to have efficient catch-up read performance and then seamlessly transitions to the streaming storage for real-time data, ensuring no duplicate data is read. For batch queries on the table, streaming storage supplements real-time data for Lakehouse storage, enabling second-level freshness for Lakehouse analytics. This capability, termed Union Read, allows both layers to work in tandem for highly efficient and accurate data access.

Confluent Tableflow can bridge Kafka and Iceberg data, but that is just a data movement that data integration tools like Fivetran or Airbyte can also achieve. Tableflow is a Lambda Architecture that uses two separate systems (streaming and batch), leading to challenges like data inconsistency, dual storage costs, and complex governance. On the other hand, Fluss is a Kappa Architecture; it stores one copy of data and presents it as a stream or a table, depending on the use case. Benefits:

* Cost and Time Efficiency: no longer need to move data between system

* Data Consistency: reduces the occurrence of similar-yet-different datasets, leading to fewer data pipelines and simpler data management.

* Analytics on Stream

* Freshness on Lakehouse

When to use Kafka Vs. Fluss

Kafka is a general-purpose distributed event streaming platform optimized for high-throughput messaging and event sourcing. It excels in event-driven architectures and data pipelines. Fluss is tailored for real-time analytics. It works with streaming processing like Flink and Lakehouse formats like Iceberg and Paimon.

How do you compare Fluss with OLAP Engines like Apache Pinot?

Architecture: Pinot is an OLAP database that supports storing offline and real-time data and supports low-latency analytical queries. In contrast, Fluss is a storage to store real-time streaming data but doesn't provide OLAP abilities; it utilizes external query engines to process/analyze data, such as Flink and StarRocks/Spark/Trino (on the roadmap). Therefore, Pinot has additional query servers for OLAP serving, and Fluss has fewer components.

Pinot is a monolithic architecture that provides complete capabilities from storage to computation. Fluss is used in a composable architecture that can plug multiple engines into different scenarios. The rise of Iceberg and Lakehouse has proven the power of composable architecture. Users use Parquet as the file format and Iceberg as the table format, Fluss on top of Iceberg as the real-time data layer, Flink for streaming processing, and StarRocks/Trino for OLAP queries. Fluss in the architecture can augment the existing Lakehouse with mill-second-level fresh data insights.

API: The API of Fluss is RPC protocols like Kafka, which provides an SDK library, and query engines like Flink provide SQL API. Pinot provides SQL for OLAP queries and BI tool integrations.

Streaming reads and writes: Fluss provides comprehensive streaming reads and writes like Kafka, but Pinot doesn't natively support them. Pinot connects to external streaming systems to ingest data using a pull-based mechanism and doesn't support a push-based mechanism.

When to use Fluss vs Apache Pinot?

If you want to build streaming analytics streaming pipelines, use Fluss (and usually Flink together). If you want to build OLAP systems for low-latency complex queries, use Pinot. If you want to augment your Lakehouse with streaming data, use Fluss.

How is Fluss integrated with Apache Flink?

Fluss focuses on storing streaming data and does not offer streaming processing capabilities. On the other hand, Flink is the de facto standard for streaming processing. Fluss aims to be the best storage for Flink and real-time analytics. The vision behind the integration is to provide users with a seamless streaming warehouse or streaming database experience. This requires seamless integration and in-depth optimization from storage to computation. For instance, Fluss already supports all of Flink's connector interfaces, including catalog, source, sink, lookup, and pushdown interfaces.

In contrast, Kafka can only implement the source and sink interfaces. Our team is the community's core contributor to Flink SQL; we have the most committers and PMC members. We are committed to advancing the deep integration and optimization of Flink SQL and Fluss.

Can you elaborate on Fluss's internal architecture?

A Fluss cluster consists of two main processes: the CoordinatorServer and the TabletServer. The CoordinatorServer is the central control and management component. It maintains metadata, manages tablet allocation, lists nodes, and handles permissions. The TabletServer stores data and provides I/O services directly to users. The Fluss architecture is similar to the Kafka broker and uses the same durability and leader-based replication mechanism.

Consistency: A table creation will request CoordinatorServer, which creates the metadata and assigns replicas to TabeltServers (three replicas by default), one of which is the leader. The replica leader writes the incoming logs and replica followers fetch logs from the replica leader. Once all replicas replicate the log, the log write response will be successfully returned.

Fault Tolerance: If the TabletServer fails, CoordinatorServer will assign a new leader from the replica list, and it becomes the new leader to accept new read/write requests. Once a failed TabeltServer comes back, it catches up with the logs from the new leader.

Scalability: Fluss can scale up linearly by adding TabletServers.

How did Fluss implement the columnar storage?

Let’s start with why we need columnar storage for streaming data. Fluss is designed for real-time analytics. In analytical queries, it's common that only a portion of the columns are read, and a filter condition can prune a significant amount of data. This applies to streaming analytics, such as a Flink SQL query on Kafka data. For example, among the 20,000 Flink SQL jobs at Alibaba, only 49% of the columns of Kafka data are read on average. Still, you must read 100% of the data and deserialize all the columns.

We introduced Apache Arrow as our underlying log storage format. Apache Arrow is a columnar format that arranges data in columns. In the implementation, clients send Arrow batches to the Fluss server, and the Fluss server continuously appends the arrow batches into log files. When a read requests to read specific columns, the server returns the necessary column vectors to users, thus reducing networking costs and improving performance. In our benchmark, if you read only 10% columns, you will have a 10x increase in read throughput.

How Fluss manages Real-Time Updates and Changelog Management?

Fluss has 2 table types:

* Log Table

* Primary Key Table

Log table only supports appending data, just like Kafka topics. The primary key table has the primary key definition and thus supports real-time updates on the primary key. Log Table uses LogStore to store data in Arrow format in the storage model. Primary Key Table uses LogStore to store changelogs and KvStore to store the materialized view of the changelog. KvStore leverages RocksDB to support real-time updates. RocksDB is a key-value embedded storage engine based on the LSM tree; the key is the primary key, and the value is the row.

Write path: when an update request is to the TabletServer, it first looks KvStore for the previous row of the key, combines the previous row and new row as the changelog, writes the changelog to LogStore, and uses it as a WAL for KvStore recovery, then it writes the new row into KvStore. Flink can consume changelogs from the table's LogStore to process streams.

Partial updates: Look up the previous row of the key, merge the previous row and the new row on the update columns, and write back the merged row to KvStore.

How does Fluss handle high throughput and low latency?

Fluss achieves high throughput and low latency through a combination of innovative techniques. It utilizes end-to-end zero-copy operations, transferring data directly from the producer, through the network, to the server and filesystem, and back to the consumer without unnecessary data duplication. Data is processed in batches (defaulting to 1 MB), making the system latency-insensitive. Further efficiency is gained through zstd level 3 compression, reducing data size. Asynchronous writes allow multiple batches in transit simultaneously, eliminating delays in waiting for write confirmations. Finally, columnar pruning minimizes the amount of data transferred by only sending the necessary columns for a given query.

How is Fluss fault tolerance and data recovery work?

We utilize the same approach with Kafka, the synchronous replication, and the ISR (in-sync-replicas) strategy.

Recovery time: like Kafka, within seconds. But for the primary key table, it may take minutes as it has to download snapshots of RocksDB from remote storage.

What about the scalability of Fluss?

The Fluss cluster can scale linearly by adding TabletServers. A table can scale up throughput by adding more buckets (the partition concept in Kafka). We don't support data rebalancing across multiple nodes, but this is a work in progress.

What is the future roadmap for Fluss?

Fluss is undergoing significant lakehouse refactoring to enhance its capabilities and flexibility. This includes making the data lake format pluggable and expanding beyond the current Paimon support to incorporate formats like Iceberg and Hudi through collaborations with companies like Bytedance and Onehouse. Support for additional query engines is also being developed, with Spark integration currently in progress and StarRocks planned for the near future. Finally, to ensure seamless integration with existing infrastructure, Fluss is being made compatible with Kafka, allowing Kafka clients and tools to interact directly with the platform.

References:

https://www.alibabacloud.com/blog/introducing-fluss-streaming-storage-for-real-time-analytics_601921

All rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers’ opinions.

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com