Jan 31 2025 11 mins 1

Seventy3: 用NotebookLM将论文生成播客,让大家跟着AI一起进步。

今天的主题是:

Don't Do RAG: When Cache-Augmented Generation is All You Need for Knowledge Tasks

Summary

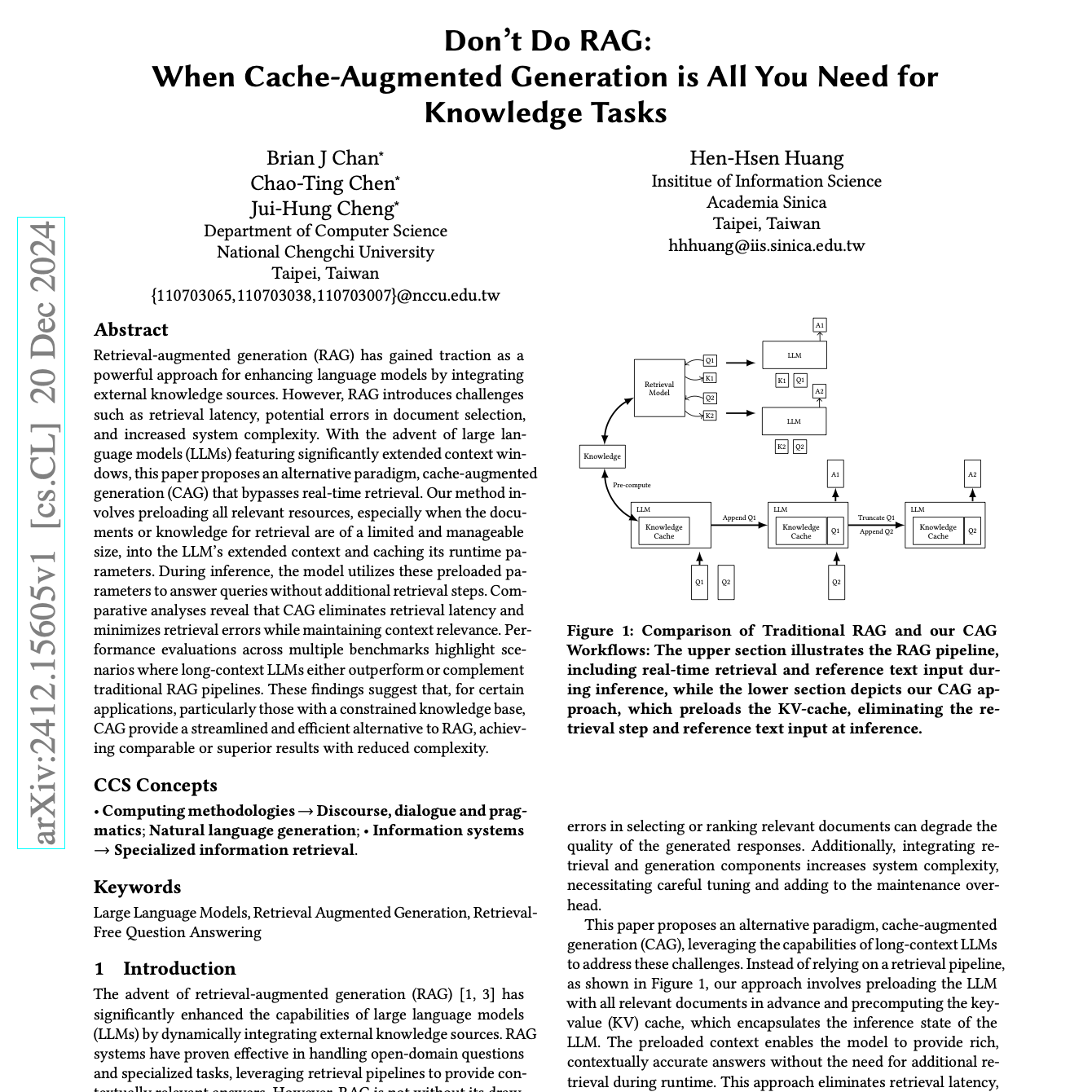

This research paper introduces cache-augmented generation (CAG) as a more efficient alternative to retrieval-augmented generation (RAG) for knowledge-intensive tasks. CAG preloads all relevant knowledge into a large language model (LLM), eliminating the need for real-time retrieval and its associated latency and errors. Experiments using SQuAD and HotPotQA datasets demonstrate CAG's superior performance and speed, especially when the knowledge base is manageable in size. The authors highlight the advantages of CAG's simplified architecture and improved efficiency, suggesting it as a robust solution for specific applications. The paper concludes by exploring potential hybrid approaches combining preloading with selective retrieval.

本文提出了缓存增强生成(cache-augmented generation, CAG),作为知识密集型任务中比检索增强生成(retrieval-augmented generation, RAG)更高效的替代方案。CAG 将所有相关知识预加载到大型语言模型(LLM)中,消除了实时检索的需求,避免了其相关的延迟和错误。通过在 SQuAD 和 HotPotQA 数据集上的实验,展示了 CAG 在性能和速度上的优越性,尤其在知识库规模可控的情况下。作者强调了 CAG 简化架构和提高效率的优势,建议其作为特定应用中的一种可靠解决方案。最后,论文探讨了结合预加载和选择性检索的潜在混合方法。

原文链接:https://arxiv.org/abs/2412.15605